The AI Plateau Is Real — How We Jump To The Next Breakthrough

By Gordon Ritter and Wendy Lu

Imagine asking an LLM for advice on making the perfect pizza, only to have it suggest using glue to help your cheese stick — or watching it fumble basic arithmetic that wouldn’t trip up your average middle school student. Such are the limitations and quirks of generative AI models today.

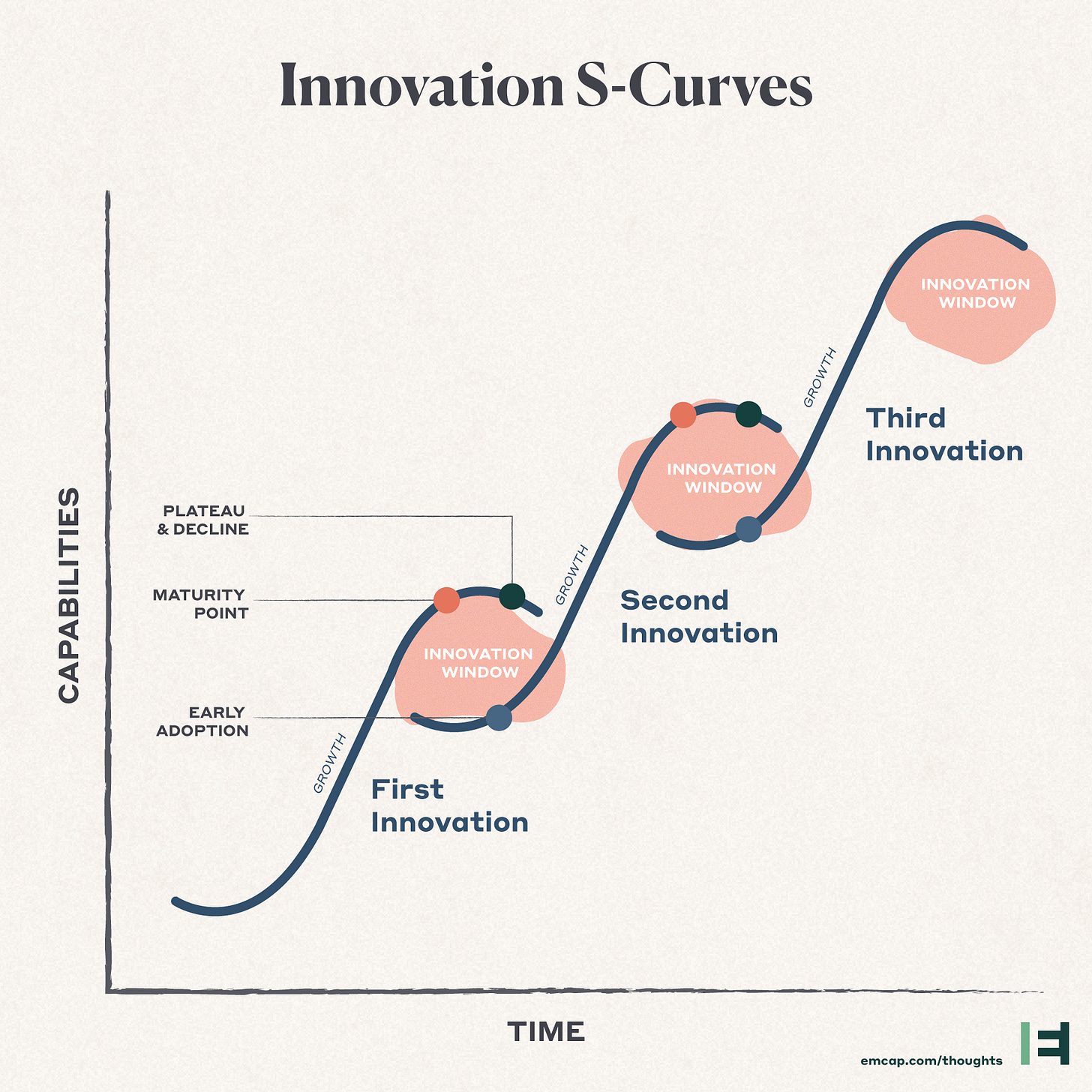

History tells us that technological advancement never happens in a straight line. A slow, almost undetectable buildup of knowledge and craft meets a spark, setting off an explosion of innovation that eventually levels off into a plateau. Across centuries, these waves of innovation share a common pattern: the S-curve. For example:

TCP/IP synthesized several innovations dating back to the 1960s. After the initial design emerged in 1973, development accelerated significantly, eventually stabilizing with version 4 (IPv4) in 1981, which is still in use across most of the Internet today.

During the Browser Wars of the late 1990s, browser technology improved dramatically: a passive terminal became a fast, interactive, and fully programmable platform. Changes to browsers since then have been incremental by comparison.

The launch of the App Store led to an explosion of innovation in mobile apps in the early 2010s. Today, novel mobile products are few and far between.

The AI Plateau

We have just watched this same pattern play out in the AI revolution. In a 1950 paper, Alan Turing became one of the first computer science pioneers to explore how one might actually build a thinking machine.

More than seventy years later, OpenAI seized on decades of sporadic progress to create a large language model that arguably passes the Turing Test, answering questions in a way that is indistinguishable from a human's, though it is still far from perfect.

When ChatGPT was first released in November 2022, it sent a shockwave around the world. For a while, each subsequent release, from OpenAI as well as from companies such as Anthropic, Google, and Meta, brought dramatic improvements.

These days, each new LLM release delivers only limited, incremental gains. Consider this chart of the performance improvements across OpenAI's flagship models:
